Hello and welcome to another premium newsletter. Thanks as ever for subscribing, and please email me at ez@betteroffline.com to say hello.

As I've written again and again, the costs of running generative AI do not make sense. Every single company offering any kind of generative AI service — outside of those offering training data and services like Turing and Surge — is, from every report I can find, losing money, and doing so in a way that heavily suggests that there's no way to improve their margins.

In fact, let me give you an example of how ridiculous everything has got, using points I'll be repeating behind the premium break.

Anysphere is a company that sells subscriptions to its AI coding app Cursor, and Cursor predominantly relies on compute from Anthropic via Anthropic's models Claude Sonnet 4 and Opus 4.1. Per Tom Dotan at Newcomer, Cursor sends 100% of its revenue to Anthropic, which then takes that money and puts it into building out Claude Code, a competitor to Cursor. Cursor is Anthropic's largest customer. Cursor is deeply unprofitable, and was that way even before Anthropic chose to add "Service Tiers," jacking up the prices for enterprise apps like Cursor.

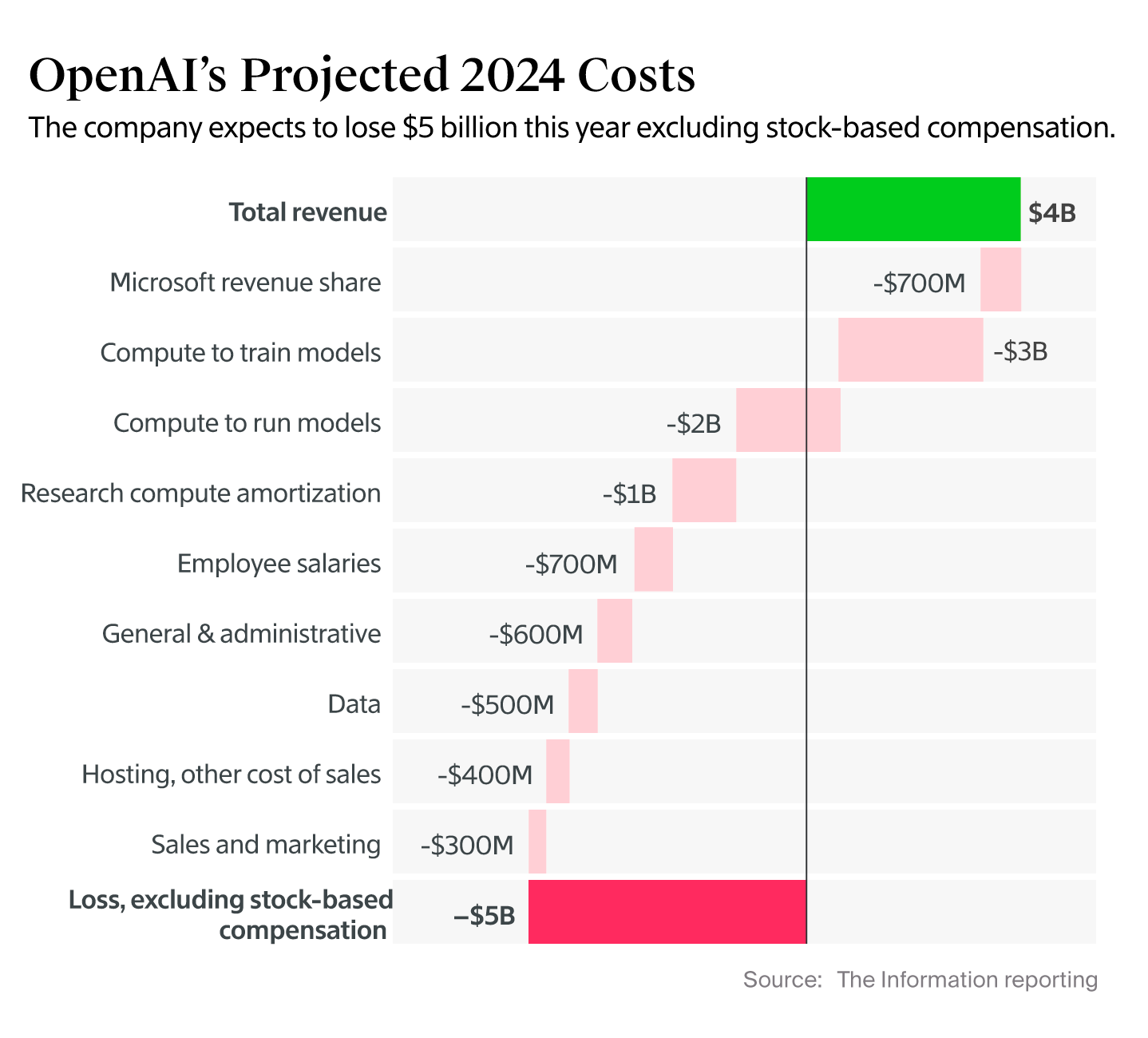

My gut instinct is that this is an industry-wide problem. Perplexity spent 164% of its revenue in 2024 between AWS, Anthropic and OpenAI. And one abstraction higher (as I'll get into), OpenAI spent 50% of its revenue on inference compute costs alone, and 75% of its revenue on training compute too (and ended up spending $9 billion to lose $5 billion). Yes, those numbers add up to more than 100%. That's my god damn point.
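The percentages above are simple arithmetic, and it's worth spelling out what they imply. This sketch uses only the figures quoted in this piece; the helper names are mine, purely for illustration.

```python
# Compute spend as a share of revenue, using only figures quoted in this piece.
# Helper names are illustrative, not from any real library.


def spend_ratio(*shares_of_revenue: float) -> float:
    """Add up several cost lines, each expressed as a fraction of revenue."""
    return sum(shares_of_revenue)


def implied_revenue(total_spend: float, loss: float) -> float:
    """If a company spent `total_spend` and lost `loss`, revenue was the difference."""
    return total_spend - loss


# OpenAI, 2024: inference compute (50% of revenue) plus training compute (75%).
openai_compute = spend_ratio(0.50, 0.75)

# Perplexity, 2024: 164% of revenue across AWS, Anthropic and OpenAI.
perplexity_compute = spend_ratio(1.64)

# "Spending $9 billion to lose $5 billion" implies roughly $4 billion in revenue.
openai_revenue_bn = implied_revenue(9.0, 5.0)

for name, ratio in [("OpenAI (compute only)", openai_compute),
                    ("Perplexity", perplexity_compute)]:
    # Anything above 1.0 means these costs alone exceed everything the company earns.
    overspend = ratio - 1
    print(f"{name}: {ratio:.0%} of revenue, ${overspend:.2f} spent beyond each $1 earned")

print(f"OpenAI implied 2024 revenue: ${openai_revenue_bn:.0f} billion")
```

Both ratios land above 1.0 before a single salary, office or marketing dollar is counted.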

Large Language Models are too expensive, to the point that anybody funding an "AI startup" is effectively sending that money to Anthropic or OpenAI, who then immediately send that money to Amazon, Google or Microsoft, who are yet to show that they make any profit on selling it.

Please don't waste your breath saying "costs will come down." They haven't come down, and they're not going to.

Despite categorically wrong boosters claiming otherwise, the cost of inference — everything that happens from when you put a prompt in to when the model generates an output — is increasing, in part thanks to the token-heavy generations that "reasoning" models need to produce their outputs. And with reasoning being the only way to get "better" outputs, these models are here to stay (and will continue burning shit tons of tokens).
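To see why per-token price cuts don't translate into cheaper inference, here's a minimal sketch of per-request cost. Every price and token count in it is hypothetical, and it assumes (as is common with reasoning models) that the hidden "thinking" tokens are billed at the output-token rate.

```python
from dataclasses import dataclass


@dataclass
class Request:
    input_tokens: int           # the prompt
    output_tokens: int          # the visible answer
    reasoning_tokens: int = 0   # hidden "thinking"; assumed billed as output


def request_cost(req: Request, input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request, with prices quoted per million tokens."""
    billed_output = req.output_tokens + req.reasoning_tokens
    return (req.input_tokens * input_price_per_m
            + billed_output * output_price_per_m) / 1_000_000


# Hypothetical numbers: the same prompt and answer, with and without reasoning.
plain = request_cost(Request(2_000, 500), input_price_per_m=2.0, output_price_per_m=10.0)

# Even with per-token prices cut in half, 8,000 reasoning tokens swamp the savings.
reasoning = request_cost(Request(2_000, 500, reasoning_tokens=8_000),
                         input_price_per_m=1.0, output_price_per_m=5.0)

print(f"plain: ${plain:.4f}  reasoning at half price: ${reasoning:.4f}  "
      f"({reasoning / plain:.1f}x)")
```

Halving the price per token still leaves this hypothetical request roughly five times more expensive, because the model bills seventeen times as many output tokens.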

This has a very, very real consequence. Christopher Mims of the Wall Street Journal reported last week that software company Notion — which offers AI that boils down to "generate stuff, search, meeting notes and research" — had AI costs eat 10% of its profit margins to provide literally the same crap that everybody else does. As I discussed a month or two ago, the increasing cost of AI has begun a kind of subprime AI crisis, where Anthropic and OpenAI are having to charge more for their models and raise prices on their enterprise customers to boot.

As discussed previously, OpenAI lost $5 billion and Anthropic $5.3 billion in 2024, with OpenAI expecting to lose upwards of $8 billion in 2025 and Anthropic — somehow — expecting to lose only $3 billion. I have severe doubts that these numbers are realistic, with OpenAI burning at least $3 billion in cash on salaries this year alone, and Anthropic somehow burning two billion dollars less on revenue that has, if you believe its leaks, increased 500% since the beginning of the year. Though I can't say for sure, I expect OpenAI to burn at least $15 billion in compute costs this year alone, and I wouldn't be surprised if its burn were $20 billion or more.

At this point, it's becoming obvious that it is not profitable to provide model inference, despite Sam Altman recently saying that OpenAI was profitable on inference. He is no doubt trying to play silly buggers with the concept of gross profit margins — suggesting that inference is "profitable" as long as you don't include training, staff, R&D, sales and marketing, and any other indirect costs.
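The "gross margin" trick is easiest to see with a toy income statement. The figures below are entirely hypothetical and in arbitrary units; the point is only how the choice of which costs to count can flip the sign.

```python
def margin(revenue: float, costs: dict[str, float], counted: set[str]) -> float:
    """Profit margin that only subtracts the cost categories listed in `counted`."""
    subtracted = sum(amount for name, amount in costs.items() if name in counted)
    return (revenue - subtracted) / revenue


# Hypothetical figures, arbitrary units.
revenue = 100.0
costs = {
    "inference": 60.0,
    "training": 50.0,
    "staff_and_rnd": 30.0,
    "sales_and_marketing": 20.0,
}

# "Profitable on inference": only serving costs count.
gross = margin(revenue, costs, {"inference"})

# Everything the company actually pays for.
full = margin(revenue, costs, set(costs))

print(f"inference-only margin: {gross:.0%}")
print(f"fully loaded margin:   {full:.0%}")
```

Same company, same year: a healthy-looking margin on inference alone, and a deep loss once every cost is on the books.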

I will also add that OpenAI pays a discounted rate on its compute.

In any case, we don't have even one — literally one — profitable model developer, one company providing these services that isn't posting a multi-million or billion-dollar loss. In fact, even if you remove the cost of training models from OpenAI's 2024 financials (as reported by The Information), OpenAI would still have lost 2.2 billion fucking dollars.

One of you will say "oh, actually, this is standard accounting." If that's the case, OpenAI had a 10% gross profit margin in 2024, and while OpenAI has leaked that it has a 48% gross profit margin in 2025, Altman also claimed that GPT-5 scared him, comparing it to the Manhattan Project. I do not trust him.

Generative AI has a massive problem that the majority of the tech and business media has been desperately avoiding discussing: that every single company is unprofitable, even those providing the models themselves. Reporters have spent years hand-waving around this issue, insisting that "these companies will just work it out," yet never really explaining how they'd do so other than "the cost of inference will come down" or "new silicon will bring down the cost of compute."

Neither of these things has happened, and it's time to take a harsh look at the rotten economics of the Large Language Model era.

Generative AI companies — OpenAI and Anthropic included — lose millions or billions of dollars, and so do the companies building on top of them, in part because the costs associated with delivering models continue to increase. Integrating Large Language Models into your product already loses you money, and that's at prices where the Large Language Model providers themselves (EG: OpenAI and Anthropic) are losing money.

I believe that generative AI is, at its core, unprofitable, and that no company building their core services on top of models from Anthropic or OpenAI has a path to profitability outside of massive, unrealistic price increases.

The only realistic path forward for generative AI firms is to start charging their users the direct costs of running their services, and I do not believe users will be enthusiastic about paying them, because the compute the average user burns costs vastly more than the money the company makes from that user each month.

As I'll discuss, I don't believe it's possible for these companies to make a profit even with usage-based pricing, because the outcomes needed to make things like coding LLMs useful require far more compute than is feasible for an individual or business to pay for.

For example, a Cursor user found themselves burning nearly $4 in the space of a few minutes creating a Todo file for the model to follow. AI boosters will argue that this user is "inexperienced" or "used the wrong model," but these are rationalizations of the inherent problems with Large Language Models — that they do not "know" anything, that they are not "intelligent," that they cannot be trusted to execute tasks efficiently or even correctly, and that the core flaws of AI models are such that their only consistent use case is making Anthropic or OpenAI lose money.
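The gap between a flat subscription and what a user actually costs can be sketched in a few lines. The roughly $4 per session comes from the Cursor anecdote above; the $20 monthly subscription, ten sessions a month and 10% markup are hypothetical assumptions, not any company's real pricing or usage data.

```python
def flat_plan_margin(subscription: float, cost_per_session: float, sessions: int) -> float:
    """What the provider keeps from a flat-fee user after paying for their compute."""
    return subscription - cost_per_session * sessions


def usage_based_bill(cost_per_session: float, sessions: int, markup: float) -> float:
    """What the same user would pay if charged direct compute cost plus a markup."""
    return cost_per_session * sessions * (1 + markup)


def breakeven_sessions(subscription: float, cost_per_session: float) -> float:
    """How many sessions a flat fee covers before the provider starts losing money."""
    return subscription / cost_per_session


SUBSCRIPTION = 20.0      # hypothetical flat monthly fee
COST_PER_SESSION = 4.0   # roughly the Cursor anecdote above
SESSIONS = 10            # hypothetical monthly usage

flat = flat_plan_margin(SUBSCRIPTION, COST_PER_SESSION, SESSIONS)
usage = usage_based_bill(COST_PER_SESSION, SESSIONS, markup=0.10)
breakeven = breakeven_sessions(SUBSCRIPTION, COST_PER_SESSION)

print(f"flat plan margin:    {flat:+.2f} USD")
print(f"usage-based bill:    {usage:.2f} USD")
print(f"break-even sessions: {breakeven:.0f}")
```

Under these assumptions the flat plan is underwater after five sessions, and charging the real cost means asking the user for more than double the subscription price.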

I will also go into how ludicrous the economics behind generative AI have become, with companies sending 100% or more of their revenue directly to cloud compute or model providers.

And I'll explain why, at its core, generative AI is antithetical to the way that software is sold, and why I believe this doomed it from the very beginning.